Runway Gen-3 Alpha

Gen-3 Alpha, built on entirely new infrastructure, introduces a range of new features. It is better at understanding complicated prompts and at creating lifelike clips with authentic movement, detail, and physics.

Key Features

- Superior Video Output: Gen-3 Alpha can create cinematic-level video output, with detailed and lifelike visuals in each frame.

- Act One Animator: Animate still character images with realistic, consistent movements.

- Video Extender: Lengthen your videos by up to 10 seconds of fresh content with each use.

- High-Fidelity Output: Gen-3 Alpha videos are more consistent, fluid, and rich, resulting in more natural visuals across the board.

- Advanced Temporal Controls: Create distinctive, dynamic transitions between scenes using only text prompts.

- Lifelike People: Humans look better than ever in Gen-3 Alpha, with the most authentic movements and reactions.

- Lip Syncing Audio: Sync the audio of people speaking with accurate lip movements of the video subjects.

- Video to Video: Use a video as your baseline and transform it in various ways.

Superior Video Output

Runway's Gen-3 Alpha model has been extensively trained using both video and image-based content to become smarter than the prior version. It offers three forms of AI generation: Text to Video, Image to Video, and Text to Image. And it brings exciting features to the table, like Motion Brush, Advanced Camera Controls, and Director Mode.

It allows users to create highly detailed videos with complex scene changes, a wide range of cinematic choices, and detailed art directions.

| Prompt | Input Image | Output video |
| High-speed, dynamic angle, the camera locks onto a plastic bag floating through the air across a sandy scene. The bag is semi-transparent and floats up and down on the breeze, but remains clearly visible and in focus throughout the scene. | None | |
| The gloved hands pull to stretch the face made of a bubblegum material | | |
| The sea anemones sway and flow naturally in the water. the camera remains still. | | |

Act One

Act One is one of the most exciting additions to the Gen-3 Alpha version of Runway. It's a character animation tool, aimed at producing the most realistic, authentic facial movements, speech patterns, and expressions in human subjects.

With this, users can create their own character performances that look just like the real thing, and it works in various styles, from photorealistic videos of people talking to cute animated scenes in the styles of major animation studios, like Disney and Pixar.

It works so well because the tool was trained on reams of facial animation data and mocap, opening lots of new opportunities for creative expression.

| Driving performance | Output Video |

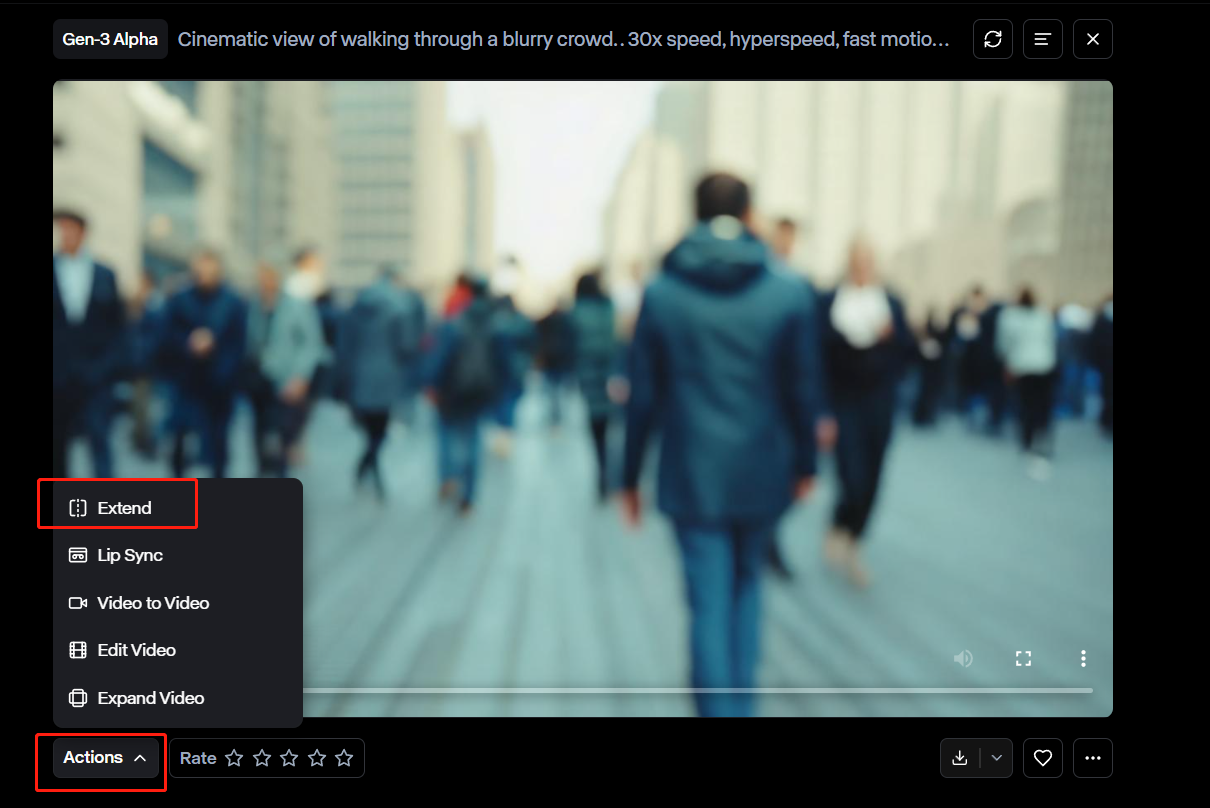

Extend Video

With Gen-3 Alpha, you can make your AI-generated videos longer than before, adding new sections of content in 5 or 10 second increments. You can extend a single video up to three times, adding up to 30 extra seconds for a maximum total length of 40 seconds.

The process of extending videos is also quick and easy – you just have to select the "Extend" tool in the "Actions" menu beside your video.

By default, the tool will add on new content following the final frame of your original video. And you can enter your own text prompt to tell the AI model what to include in the extension, describing the scene, the camera movement, and other factors.

Gen-3 Alpha lets you choose between a 5 second and a 10 second addition each time you extend.
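The arithmetic behind extending a clip can be sketched in a few lines of Python. This is only an illustration, not Runway code: the function name `extended_length` and the constants are hypothetical, and the limits (three extensions, 5 or 10 seconds each) are the ones stated in this article.

```python
from typing import Sequence

MAX_EXTENSIONS = 3        # per this article: a clip can be extended up to three times
ALLOWED_STEPS = (5, 10)   # per this article: each extension adds 5 or 10 seconds


def extended_length(base_seconds: int, extensions: Sequence[int]) -> int:
    """Return the total clip length after applying the given extensions."""
    if len(extensions) > MAX_EXTENSIONS:
        raise ValueError(f"at most {MAX_EXTENSIONS} extensions are allowed")
    for step in extensions:
        if step not in ALLOWED_STEPS:
            raise ValueError(f"each extension must be one of {ALLOWED_STEPS} seconds")
    return base_seconds + sum(extensions)


# A 10 second clip extended three times by 10 seconds each.
print(extended_length(10, [10, 10, 10]))
```

Under these assumptions, the longest result is a 10 second clip plus three 10 second extensions, which gives 40 seconds in total.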

| Original Video | Extended Video |

High-Fidelity Output

Runway's Gen-3 Alpha takes consistency and high-fidelity output to new levels when compared to previous versions, like Gen-2.

It's able to create videos depicting authentic, lifelike movements such as walking, running, jogging, and jumping. Each movement flows neatly and realistically, without glitches or other AI artifacts, keeping the audience engaged and immersed.

What's more, the new model is able to maintain consistent movement and visuals from frame to frame. This is thanks to its superior understanding of prompts and better algorithms than the prior Gen-2 version.

All of this is also delivered faster than ever – Gen-3 Alpha is 2x faster than Gen-2.

Advanced Temporal Controls

Runway's Gen-3 Alpha model has been trained to comprehend complex, layered prompts that involve multiple scenes and temporal ideas all wrapped together. Because of that, it's very good at understanding prompts that describe scenes or visuals which go through various changes or transitions.

For the end user, this means that Gen-3 Alpha generates smooth, fluid transitions between the scenes or segments of each video. It also gives you more control over key-framing, so you can set the precise moments in the video where particular events occur or elements appear.

With so much control, users can make the exact videos they want, without having to compromise.

Lifelike People

Runway's Gen-3 Alpha has been trained to make its human subjects look as realistic as they possibly can. In other words, humans in these AI videos are very hard to tell apart from real people.

Whether they're talking, making facial expressions, or performing various activities like running and jumping, the people in these videos are highly authentic. This gives users a lot of possibilities in terms of telling stories and making human-oriented content.

Lip Syncing Audio

The "Lip Sync" feature lets you synchronize an audio track or speech with realistic lip movements of your characters and subjects.

You can type out your own script for your AI characters to say, record your own voice directly in Runway, or upload an audio file. Lip Sync will then sync it up with the character's mouth movements, so it really looks like they're delivering that audio.

There are also various voice options to configure and choose between when you use Lip Sync.

Video to Video Generation

Simply upload (or generate) a video to use as the baseline or reference point for the AI model. You can then transform that video in various ways, such as turning a realistic video into an animated one, or vice versa.

| Input Video | Text prompt | Output Video |
| | 3D halftone CMYK style. halftone print dot. comic book. vibrant colors in layers of cyan blue, yellow, magenta purple, and black circular dots. | |

Best Prompts for Using Runway Gen-3 Alpha

To get the most out of Gen-3 Alpha, you need to use the best kinds of prompts. Runway itself encourages users to stick to the "[camera movement]: [establishing scene]. [additional details]." formula.

For example, you could enter a prompt like "Slow horizontal pan: a young woman walks through the desert. It then begins to rain."

The more detail you can put into your prompts, the more specific and tailored results you'll get. So, if you have a certain vision in mind of how you want your video to look, it's best to be detailed.

We can apply this to the previous example, to get something like:

"The camera pans slowly from left to right across a desert of golden sand. A woman in her mid-20s walks slowly across the scene. She's wearing a floral dress. She looks up as rain starts to fall from grey clouds above."
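As a minimal sketch, the "[camera movement]: [establishing scene]. [additional details]." formula can be treated as a small string template. The helper below is purely illustrative: `build_prompt` is a hypothetical name, it does plain string formatting, and it does not call any Runway API.

```python
def build_prompt(camera_movement: str, scene: str, details: str = "") -> str:
    """Assemble a prompt shaped like '[camera movement]: [establishing scene]. [additional details].'"""

    def clean(part: str) -> str:
        # Trim whitespace and trailing '.' or ':' so separators are not doubled.
        return part.strip().rstrip(".:").strip()

    prompt = f"{clean(camera_movement)}: {clean(scene)}."
    if clean(details):
        prompt += f" {clean(details)}."
    return prompt


example = build_prompt(
    "Slow horizontal pan",
    "a young woman walks through the desert",
    "It then begins to rain",
)
print(example)
# Slow horizontal pan: a young woman walks through the desert. It then begins to rain.
```

Keeping the three parts separate makes it easier to reuse the same camera movement and style across follow-on scenes, which helps with consistency.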

Here are a few bonus tips to make your prompts the best they can be:

- Provide as much detail as you can, without going overboard. Gen-3 Alpha works best with "sweet spot" prompts that aren't excessively simple or complex.

- Learn about terms for camera movements and shot types, so you can give the tool precise instructions for framing and camera angles.

- Use the same style in your prompts when creating similar or follow-on scenes so that they maintain a level of consistency.

- Try playing around with Runway's own custom preset prompts and save your favorite ones to reuse in the future.

Runway Gen-2 vs Gen-3 Alpha vs Gen-3 Alpha Turbo

| Features | Gen-2 | Gen-3 Alpha | Gen-3 Alpha Turbo |
| Video Duration | 4s | 5/10s | |
| Video Resolution | 1408 × 768px (Upscale off), 2816 × 1536px (Upscale on) | 1280 × 768px | 1280 × 768px, 768 × 1280px |
| Text to Video | | | |
| Image to Video | | | |
| Video to Video | | | |
| Motion Brush | | | |
| Camera Control | | | |
| Custom Styles | | | |
| Lip Sync | | | |
| Act-One | | | |
| Expand Video | | | |

Reddit Reviews of Gen-3 Alpha

So, what do general users think about Runway's Gen-3 Alpha? Well, at this time, opinions on the tool are somewhat mixed.

Some users have claimed that they're going to stick with using Gen-2 for now, since Gen-3 is only in the "Alpha" stage of development and still needs some improvements before it fulfills its potential.


Others have complained about the high cost of using Gen-3 Alpha, but some have still praised the technology and key features it has introduced.



FAQs

What is Runway Gen-3 Alpha?

Gen-3 Alpha is the newest version of the Runway AI video generative model. It introduces a raft of improvements and new features compared to the previous Gen-2 version, such as the Lip Sync feature.

What is the difference between Gen-3 Alpha and Gen-3 Alpha Turbo?

Gen-3 Alpha and Gen-3 Alpha Turbo have some differences in terms of their efficiency and cost. Namely, Gen-3 Alpha Turbo is much faster than regular Gen-3 Alpha when it comes to creating content. Turbo also costs less as it takes fewer credits to generate videos, but standard Alpha may give you more reliable results.

How is Gen-3 Alpha different from Gen-2 Text/Image to Video?

Gen-3 Alpha brings various improvements and new features compared to Gen-2. It delivers better quality videos that are more consistent and more realistic in terms of motion and physics.

How can I try Gen-3 Alpha?

You can try Gen-3 Alpha for free by signing up to Runway or using another tool where the Runway Gen-3 Alpha model is available, like Pollo AI. You don't have to spend any money to try this tool.

How do I use Gen-3 Alpha?

Gen-3 Alpha works a lot like many other AI video generators out there. You can choose to either upload an image to use as a baseline for creating AI videos, or type in a prompt and configure the various settings to control your output.

Is Runway Gen-3 free?

Not entirely, but you can use it for free by signing up to the Basic/Free plan with RunwayML. However, this will only give you limited access to the platform. If you want full access, you'll need to sign up to a paid plan.

What is Gen-3 Alpha used for?

Gen-3 Alpha is an AI video generative model that you can use to make realistic, high quality AI videos. There are lots of possible applications for this, such as creating entertainment content, adverts, demonstrations, tutorials, and more.

How many credits does Runway Gen-3 Alpha take?

At this time, it costs 10 credits per second when creating AI videos with Gen-3 Alpha. So, a 10 second clip will cost 100 credits to make, for example. You can get videos a little cheaper by using the Turbo version of Gen-3 Alpha, which only costs 5 credits per second.
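The cost calculation is simple multiplication, sketched below with the per-second rates quoted in this answer. The dictionary keys and the `generation_cost` function are hypothetical labels for illustration only, and the rates may change over time.

```python
# Credits per second as quoted in this article; treat them as assumptions.
CREDITS_PER_SECOND = {
    "Gen-3 Alpha": 10,
    "Gen-3 Alpha Turbo": 5,
}


def generation_cost(seconds: int, model: str = "Gen-3 Alpha") -> int:
    """Return the credits needed to generate a clip of the given length."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return seconds * CREDITS_PER_SECOND[model]


print(generation_cost(10))                       # 100
print(generation_cost(10, "Gen-3 Alpha Turbo"))  # 50
```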

What is the maximum length of Gen-3 Alpha generations?

At this time, Gen-3 Alpha can make clips of up to 10 seconds in length. However, you can use the Extend feature up to a maximum of three times to add a total of 30 seconds to your clips, making them a maximum of 40 seconds long.

What is the resolution of Gen 3 Alpha video?

The resolution of videos generated with Gen-3 Alpha (and Gen-3 Alpha Turbo) is currently set at 720p. But the Runway team has stated that more options will be released in the future.

How much does Runway Gen-3 cost?

Runway uses a credit system, and it costs 10 credits for every second of video you make with Gen-3 Alpha. There are various subscription plans you can sign up for that give you different amounts of credits, including a free plan which gives you 125 credits and a Pro plan which gives you 2250 credits monthly.
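To get a rough sense of what each plan covers, you can divide the plan's credits by the per-second rate. The sketch below uses only the figures quoted in this answer (125 credits on the free plan, 2250 credits on the Pro plan, 10 credits per second); `seconds_affordable` is a hypothetical helper, not part of any Runway tool.

```python
def seconds_affordable(credits: int, credits_per_second: int = 10) -> int:
    """Return how many whole seconds of video the given credits can pay for."""
    return credits // credits_per_second


# Plan credit amounts as quoted in this article.
plans = {"Free": 125, "Pro": 2250}
for name, credits in plans.items():
    print(f"{name}: {seconds_affordable(credits)} seconds of Gen-3 Alpha video")
```

With these numbers, the free plan's credits cover 12 seconds of Gen-3 Alpha video and the Pro plan's monthly credits cover 225 seconds.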